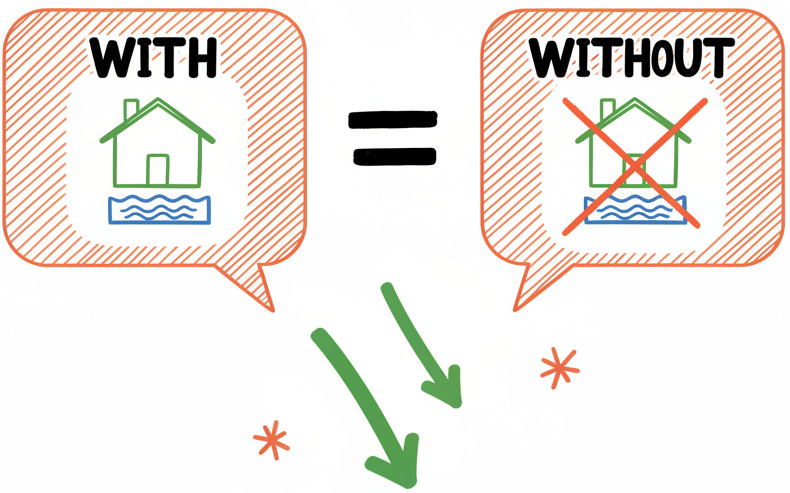

I embedded two queries, “home with pool” and “home without pool”, and their cosine similarity was 0.82. The embedding model treated the negated query as nearly identical to its positive counterpart.

For comparison, completely unrelated queries (“home with pool” vs “quarterly earnings report”) scored 0.13.
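Cosine similarity is the dot product of two vectors divided by the product of their lengths. The Python sketch below computes it. The three-dimensional vectors are made up for illustration and are not output from any embedding model, which would produce hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Dot product of a and b divided by the product of their Euclidean lengths."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


# Made-up 3-dimensional vectors for illustration only (not model output).
with_pool = [0.9, 0.4, 0.1]
without_pool = [0.8, 0.5, 0.3]
earnings_report = [0.1, -0.2, 0.95]

print(round(cosine_similarity(with_pool, without_pool), 2))     # 0.97
print(round(cosine_similarity(with_pool, earnings_report), 2))  # 0.11
```

A score near 1 means the vectors point in almost the same direction. A score near 0 means they are roughly perpendicular.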

When I ran both queries against 100,000 property listings, 3 of the top 10 results appeared in both result sets. A separate search for “not pet friendly” returned a listing advertising “pet-friendly amenities” at position 4.
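The overlap measurement can be sketched as follows: rank the listings by cosine similarity for each query, then intersect the two top-k id sets. This is a minimal in-memory sketch that assumes the listing vectors sit in a Python dict. The listing ids and two-dimensional vectors are hypothetical, and a real system over 100,000 listings would use a vector index rather than a full sort.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    # Cosine similarity: dot product over the product of vector lengths.
    return sum(x * y for x, y in zip(a, b)) / (math.hypot(*a) * math.hypot(*b))


def top_k(query: list[float], listings: dict[str, list[float]], k: int = 10) -> list[str]:
    """Ids of the k listings most similar to the query, best first."""
    ranked = sorted(listings, key=lambda lid: cosine(query, listings[lid]), reverse=True)
    return ranked[:k]


def shared_results(query_a, query_b, listings, k=10) -> set[str]:
    """Listing ids that appear in the top k results for both queries."""
    return set(top_k(query_a, listings, k)) & set(top_k(query_b, listings, k))


# Hypothetical 2-dimensional listing vectors and query vectors.
listings = {"A": [1.0, 0.0], "B": [0.9, 0.1], "C": [0.0, 1.0], "D": [0.1, 0.9]}
with_pool = [1.0, 0.05]
without_pool = [0.95, 0.2]

overlap = shared_results(with_pool, without_pool, listings, k=2)
print(f"{len(overlap)} of the top 2 results appear in both sets: {sorted(overlap)}")
```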


Why this happens

Embedding models optimize for topical similarity, not logical inference. “Home without pool” is still topically about homes and pools, so it lands near “home with pool” in vector space.

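Think geometrically. Adding “no” doesn’t flip the arrow 180 degrees; it only nudges it. A cosine similarity of 0.82 corresponds to an angle of roughly 35 degrees, while the unrelated pair at 0.13 sits at roughly 83 degrees. The dominant semantic content overwhelms the negation signal.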
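The geometric picture follows from the fact that cosine similarity is the cosine of the angle between two vectors, so the angle is its arccosine. A small Python helper converts the two scores reported above. The name `angle_degrees` is hypothetical.

```python
import math


def angle_degrees(cosine_similarity: float) -> float:
    """Angle between two vectors, in degrees, given their cosine similarity."""
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    c = max(-1.0, min(1.0, cosine_similarity))
    return math.degrees(math.acos(c))


for label, score in [("with vs without pool", 0.82), ("pool vs earnings report", 0.13)]:
    print(f"{label}: {angle_degrees(score):.1f} degrees")
# with vs without pool: 34.9 degrees
# pool vs earnings report: 82.5 degrees
```

A true logical opposite would sit near 180 degrees (cosine similarity of -1). The negated query is nowhere close.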

What to do about it

Metadata filtering: If “has_pool” is a column, use a WHERE clause. Let SQL handle Boolean logic.
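Here is a minimal sketch of the metadata approach. It uses Python’s built-in `sqlite3` module as a stand-in for SQL Server. The `listings` table, its rows, and the helper `homes_without_pool` are hypothetical. The negation becomes an exact predicate on the `has_pool` column instead of a nudge in vector space.

```python
import sqlite3

# In-memory stand-in database with hypothetical listings.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE listings (id INTEGER PRIMARY KEY, description TEXT, has_pool INTEGER NOT NULL)"
)
conn.executemany(
    "INSERT INTO listings (id, description, has_pool) VALUES (?, ?, ?)",
    [
        (1, "Sunny ranch home with heated pool", 1),
        (2, "Cozy bungalow, no pool, large garden", 0),
        (3, "Modern condo with rooftop pool access", 1),
        (4, "Family home with fenced yard", 0),
    ],
)


def homes_without_pool(conn: sqlite3.Connection) -> list[int]:
    # The negation lives in a WHERE clause on a structured column,
    # so it is exact rather than approximated by vector distance.
    rows = conn.execute("SELECT id FROM listings WHERE has_pool = 0 ORDER BY id")
    return [row[0] for row in rows]


print(homes_without_pool(conn))  # [2, 4]
```

In a combined pipeline, the vector ranking would then run only over the rows that pass this filter.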

Full text search: Traditional keyword search handles negation with Boolean operators. A query for “pool” AND NOT “no pool” returns only listings that contain the term “pool” and do not contain the phrase “no pool”.
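The Boolean query can be illustrated with a toy in-memory matcher. This is not SQL Server’s full-text syntax or tokenizer. The `boolean_and_not` helper and the listing descriptions are hypothetical, and matching is a simple case-insensitive regular expression on word boundaries.

```python
import re


def contains_term(text: str, term: str) -> bool:
    """Case-insensitive match of a word or phrase on word boundaries."""
    pattern = r"\b" + re.escape(term) + r"\b"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None


def boolean_and_not(docs: dict[int, str], include: str, exclude: str) -> list[int]:
    """Ids of docs containing `include` AND NOT containing `exclude`."""
    return [
        doc_id
        for doc_id, text in docs.items()
        if contains_term(text, include) and not contains_term(text, exclude)
    ]


docs = {
    1: "Sunny ranch home with heated pool",
    2: "Cozy bungalow, no pool, large garden",
    3: "Modern condo with rooftop pool access",
    4: "Family home with fenced yard",
}
print(boolean_and_not(docs, "pool", "no pool"))  # [1, 3]
```

Listing 2 mentions “pool” but is excluded because it also contains “no pool”. A vector search has no equivalent hard exclusion.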

Rerankers: Cross-encoders process the query and document together, so they can catch negation that bi-encoders, which embed each text separately, often miss.
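Reranking is usually a second stage. A bi-encoder retrieves candidates cheaply, then a cross-encoder scores each (query, document) pair jointly and reorders them. The sketch keeps the scorer pluggable. `toy_negation_scorer` is a hypothetical keyword rule standing in for a trained model, used only so the example runs without downloading one.

```python
from typing import Callable, Sequence

# A cross-encoder reads the query and one document together and returns a
# relevance score; any function with this shape can play that role here.
PairScorer = Callable[[str, str], float]


def rerank(query: str, candidates: Sequence[str], score_pair: PairScorer, top_n: int = 10) -> list[str]:
    """Reorder first-stage candidates by a joint (query, document) score."""
    scored = [(score_pair(query, doc), doc) for doc in candidates]
    # Sort on the score only; ties keep their first-stage order.
    scored.sort(key=lambda item: item[0], reverse=True)
    return [doc for _, doc in scored[:top_n]]


def toy_negation_scorer(query: str, doc: str) -> float:
    """Hypothetical keyword rule standing in for a trained cross-encoder."""
    q, d = query.lower(), doc.lower()
    wants_no_pool = "without pool" in q or "no pool" in q
    has_pool = "pool" in d and "no pool" not in d
    if wants_no_pool:
        return 0.0 if has_pool else 1.0
    return 1.0 if has_pool else 0.0


# Candidates in the order a bi-encoder might return them (hypothetical).
candidates = [
    "Sunny ranch home with heated pool",
    "Cozy bungalow, no pool, large garden",
    "Family home with fenced yard",
]
print(rerank("home without pool", candidates, toy_negation_scorer, top_n=2))
```

With a real model, `score_pair` would wrap a cross-encoder. For example, the sentence-transformers library’s `CrossEncoder` class exposes a `predict` method that scores a list of (query, document) pairs.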

SQL Server 2025 supports hybrid search, combining vector similarity with full text and metadata filters in a single query.
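One common way to merge vector and keyword results is reciprocal rank fusion (RRF), which sums 1 / (k + rank) across rankings. The Python sketch below applies a metadata filter and then fuses the two rankings with RRF. It illustrates the general pattern only and does not describe how SQL Server 2025 combines scores. The ids and rankings are hypothetical, and k = 60 is a commonly used default.

```python
def reciprocal_rank_fusion(rankings: list[list[int]], k: int = 60) -> list[int]:
    """Fuse several best-first rankings of ids; higher fused score ranks first."""
    scores: dict[int, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


def hybrid_search(vector_ranking: list[int], keyword_ranking: list[int],
                  allowed_ids: set[int], k: int = 60) -> list[int]:
    # Apply the metadata filter first so excluded rows can never be returned.
    vec = [i for i in vector_ranking if i in allowed_ids]
    kw = [i for i in keyword_ranking if i in allowed_ids]
    return reciprocal_rank_fusion([vec, kw], k)


# Hypothetical: vector search ranks 1..4, keyword search finds only listing 4,
# and the has_pool = 0 filter allows listings 2 and 4.
print(hybrid_search([1, 2, 3, 4], [4], {2, 4}))  # [4, 2]
```

Listing 4 wins because it appears in both rankings, while listings 1 and 3 never reach fusion because the filter removes them.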

The takeaway

Vector search is powerful for fuzzy semantic matching, but don’t count on it to understand “no”. Layer it with metadata filters, full text search, or rerankers.

Like this content? I recently published SQL Server 2025 Vector Search Essentials, a hands-on course covering vector search from fundamentals to production patterns.