In my last post, I showed that vector search treats “home with pool” and “home without pool” as nearly identical (a similarity score of 0.82). The bi-encoder models behind vector search struggle with negation.

Cross-encoders can help with this. But there’s a trade-off.

The difference

Bi-encoders (standard embedding models) encode the query and document separately, then compare the vectors. This is fast because you can pre-compute document embeddings. But encoding separately means the model never sees “without” in the context of the document’s “pool.”
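Here is a minimal Python sketch that makes the separation concrete. `toy_embed` is a hypothetical stand-in (a bag-of-words counter, not a neural model) that lets the example run without downloads. What carries over to real models is the structure: each document is encoded once, offline, and the query is encoded on its own and compared by cosine similarity.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

Vector = Dict[str, float]

def toy_embed(text: str) -> Vector:
    """Hypothetical stand-in for an embedding model: a bag-of-words vector.

    Like a real bi-encoder, it only ever sees one text at a time.
    """
    return dict(Counter(text.lower().split()))

def cosine(a: Vector, b: Vector) -> float:
    dot = sum(value * b.get(key, 0.0) for key, value in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def build_index(docs: List[str]) -> List[Tuple[str, Vector]]:
    # Offline step: every document is encoded once, with no knowledge of future queries.
    return [(doc, toy_embed(doc)) for doc in docs]

def vector_search(query: str, index: List[Tuple[str, Vector]], k: int = 10) -> List[Tuple[str, float]]:
    # Online step: encode only the query, then compare it against the stored vectors.
    query_vec = toy_embed(query)
    scored = [(doc, cosine(query_vec, vec)) for doc, vec in index]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]
```

With this toy embedding, `cosine(toy_embed("home with pool"), toy_embed("home without pool"))` is 2/3. The two texts share two of their three words, and neither vector records that “without” negates “pool”. The number is not comparable to the 0.82 from a real model. It only illustrates that each text is encoded without seeing the other.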

Cross-encoders process the query and document together. The model sees both texts simultaneously, so it can reason about whether “without pool” matches a document describing a pool. Joint attention catches what separate encoding misses.

The experiment

I ran the queries from my previous test through ms-marco-MiniLM-L6-v2, a cross-encoder model trained on Microsoft’s MS-MARCO passage retrieval dataset. This model is free, open-source, and runs locally via the sentence-transformers library.

I retrieved the top 50 candidates with vector search (the fast, negation-blind method), which left the no-pool homes buried at positions 15-50. Then I reranked all 50 with the cross-encoder.
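The rerank step itself is short. Here is a Python sketch that assumes a hypothetical `score_pairs` callable: it takes a list of (query, document) pairs and returns one relevance score per pair, for instance as a thin wrapper around a cross-encoder. It also records each candidate's position before and after reranking, which is one way to check whether buried results moved up.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical hook: (query, document) pairs in, one relevance score per pair out.
ScoreFn = Callable[[List[Tuple[str, str]]], Sequence[float]]

def rerank_with_positions(query: str, candidates: List[str],
                          score_pairs: ScoreFn) -> List[Tuple[str, float, int, int]]:
    """Rerank vector-search candidates and report 1-based positions.

    Returns (doc, score, position_before, position_after), sorted by score.
    """
    if not candidates:
        return []
    pairs = [(query, doc) for doc in candidates]
    scores = list(score_pairs(pairs))
    if len(scores) != len(candidates):
        raise ValueError("score_pairs must return one score per pair")
    # The incoming list order is the vector-search ranking (position_before).
    order = sorted(range(len(candidates)), key=lambda i: scores[i], reverse=True)
    return [
        (candidates[i], float(scores[i]), i + 1, new_pos + 1)
        for new_pos, i in enumerate(order)
    ]
```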

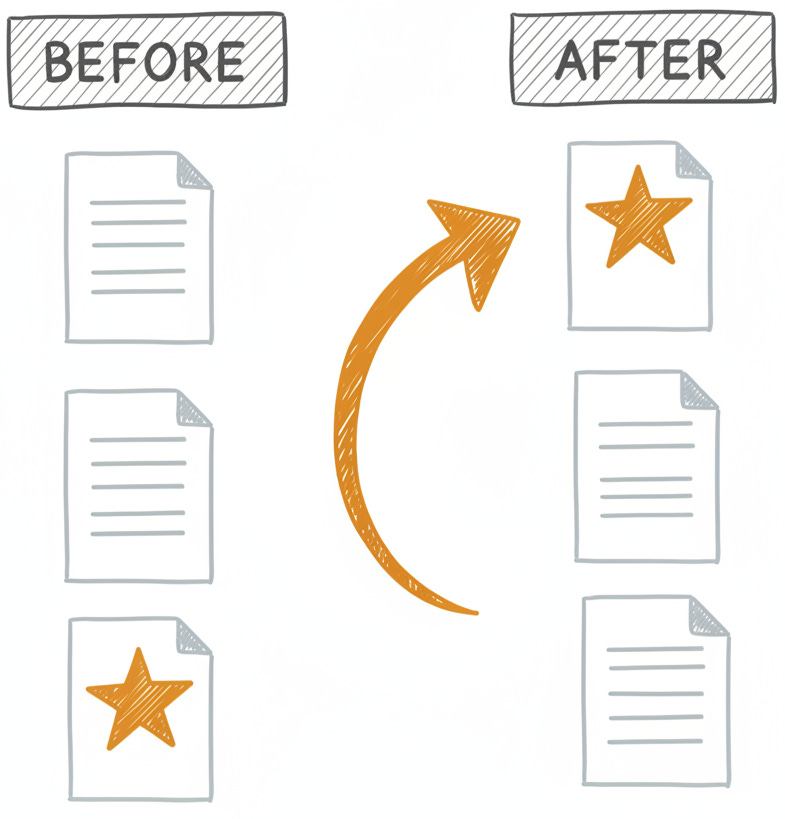

Before reranking, pool homes dominated the top results. The no-pool homes that should have ranked first were scattered throughout positions 15-50.

After reranking, the cross-encoder lifted those buried no-pool homes into the top 10. Pool homes dropped down the list, with much lower relevance scores. The model understood that “without pool” should not match properties describing pools.

The cost

Ballpark latency on my machine (CPU, PyTorch backend):

Bi-encoder: ~2ms per query (vectors pre-computed)

Cross-encoder: ~80ms for 10 candidates, ~400ms for 50 candidates
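Both figures work out to roughly 8ms per candidate (80/10 and 400/50). That fits with the cross-encoder running once per (query, document) pair at query time. To collect comparable numbers on your own hardware, a rough timing sketch like this one works with any hypothetical `score_pairs` callable. It reports the median of a few runs after one warm-up call.

```python
import statistics
import time
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical hook: (query, document) pairs in, one relevance score per pair out.
ScoreFn = Callable[[List[Tuple[str, str]]], Sequence[float]]

def time_rerank(score_pairs: ScoreFn, query: str, docs: List[str],
                sizes: Sequence[int] = (10, 50), repeats: int = 5) -> Dict[int, float]:
    """Median wall-clock milliseconds to score `size` candidates, for each size."""
    results: Dict[int, float] = {}
    for size in sizes:
        pairs = [(query, doc) for doc in docs[:size]]
        if len(pairs) < size:
            raise ValueError(f"need at least {size} documents, got {len(docs)}")
        score_pairs(pairs)  # warm-up call, excluded from the timing
        samples = []
        for _ in range(repeats):
            start = time.perf_counter()
            score_pairs(pairs)
            samples.append((time.perf_counter() - start) * 1000.0)
        results[size] = statistics.median(samples)
    return results
```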

The pattern that works: retrieve 50-100 candidates with vector search, rerank them with a cross-encoder, and return the top 10. You need a large candidate pool because the “correct” results might be buried at positions 30-80.
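Here is the same pattern as a small Python function. `retrieve` and `score_pairs` are hypothetical hooks for your vector search and your reranker. `candidate_k` and `final_k` default to the 100 and 10 mentioned above.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical hooks: plug in your own vector search and reranker.
RetrieveFn = Callable[[str, int], List[str]]                 # (query, k) -> docs, best first
ScoreFn = Callable[[List[Tuple[str, str]]], Sequence[float]]  # pairs -> one score per pair

def two_stage_search(query: str, retrieve: RetrieveFn, score_pairs: ScoreFn,
                     candidate_k: int = 100, final_k: int = 10) -> List[Tuple[str, float]]:
    """Retrieve a wide candidate pool cheaply, rerank it, and keep the top final_k."""
    if final_k > candidate_k:
        raise ValueError("final_k should not exceed candidate_k")
    candidates = retrieve(query, candidate_k)  # fast stage, e.g. vector search
    if not candidates:
        return []
    scores = score_pairs([(query, doc) for doc in candidates])  # slow stage, e.g. cross-encoder
    ranked = sorted(zip(candidates, scores), key=lambda pair: pair[1], reverse=True)
    return [(doc, float(score)) for doc, score in ranked[:final_k]]
```

`candidate_k` is the main knob. Raising it improves the odds that buried results make it into the pool, at the cost of more reranking time.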

Running locally vs API

The ms-marco-MiniLM-L6-v2 model runs locally for free. Install sentence-transformers (pip install sentence-transformers), and the model downloads from Hugging Face automatically the first time you load it.
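Here is a minimal local setup. It assumes sentence-transformers' `CrossEncoder` class and its `predict` method, which scores a list of (query, document) pairs. The model id `cross-encoder/ms-marco-MiniLM-L6-v2` is an assumption about how the model is published on Hugging Face, so check the model card if it doesn't resolve. `rank_docs` is a hypothetical helper. The library is imported inside `load_local_scorer`, so the rest of the code doesn't require it.

```python
from typing import Callable, List, Sequence, Tuple

# Assumed Hugging Face model id; check the model card if it does not resolve.
MODEL_NAME = "cross-encoder/ms-marco-MiniLM-L6-v2"

ScoreFn = Callable[[List[Tuple[str, str]]], List[float]]

def load_local_scorer(model_name: str = MODEL_NAME) -> ScoreFn:
    """Build a score_pairs function backed by a local sentence-transformers CrossEncoder."""
    # Imported here so the rest of this module works without the library installed.
    from sentence_transformers import CrossEncoder

    # The first load downloads the weights from Hugging Face; later loads use the local cache.
    model = CrossEncoder(model_name)

    def score_pairs(pairs: List[Tuple[str, str]]) -> List[float]:
        if not pairs:
            return []
        # predict() runs each (query, document) pair through the model together.
        return [float(score) for score in model.predict(pairs)]

    return score_pairs

def rank_docs(query: str, docs: Sequence[str], score_pairs: ScoreFn) -> List[Tuple[str, float]]:
    """Hypothetical helper: score every document against the query, best first."""
    scores = score_pairs([(query, doc) for doc in docs])
    return sorted(zip(docs, scores), key=lambda pair: pair[1], reverse=True)
```

For example, call `rank_docs("home without pool", listings, load_local_scorer())`, where `listings` is your own list of property descriptions.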

For production, commercial options like Cohere Rerank (available on Amazon Bedrock) or Voyage AI rerankers offer managed infrastructure with usage-based pricing.

The hybrid approach

The practical solution combines multiple methods:

Metadata filters for explicit boolean attributes (has_pool, pet_friendly). If users can filter directly, let them. No reranking needed.

Vector search for semantic similarity (fuzzy matching, synonyms).

Cross-encoder reranking for nuanced queries that slip through both, such as negation in free text.
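Here is one way to wire the three layers together, as a Python sketch. `Listing`, its `attributes` dict, and `hybrid_search` are hypothetical names for illustration. The vectors are assumed to be pre-computed and L2-normalized, so a dot product equals cosine similarity. The filter runs in Python here, whereas in practice you would push it down into the database query. Passing no `score_pairs` skips the rerank, which matches the case where an explicit filter already answers the query.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Sequence, Tuple

ScoreFn = Callable[[List[Tuple[str, str]]], Sequence[float]]

@dataclass
class Listing:
    listing_id: int
    description: str
    vector: List[float]  # pre-computed, L2-normalized embedding (assumption)
    attributes: Dict[str, bool] = field(default_factory=dict)  # e.g. {"has_pool": False}

def dot(a: List[float], b: List[float]) -> float:
    # Equals cosine similarity when both vectors are L2-normalized.
    return sum(x * y for x, y in zip(a, b))

def hybrid_search(query: str, listings: List[Listing], filters: Dict[str, bool],
                  query_vector: List[float], score_pairs: Optional[ScoreFn] = None,
                  candidate_k: int = 50, final_k: int = 10) -> List[Listing]:
    # 1. Metadata filter: exact boolean attributes are applied first, never inferred.
    pool = [item for item in listings
            if all(item.attributes.get(key) == value for key, value in filters.items())]
    # 2. Vector search over whatever survives the filter.
    pool.sort(key=lambda item: dot(query_vector, item.vector), reverse=True)
    candidates = pool[:candidate_k]
    # 3. Optional rerank for nuance that neither the filter nor the vectors capture.
    if score_pairs is not None and candidates:
        scores = score_pairs([(query, item.description) for item in candidates])
        ranked = sorted(zip(candidates, scores), key=lambda pair: pair[1], reverse=True)
        candidates = [item for item, _ in ranked]
    return candidates[:final_k]
```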

SQL Server 2025 supports vector search, and you can combine it with regular SQL predicates. Microsoft also documents hybrid search patterns (great post from Davide Mauri!) that fuse full-text and vector results with Reciprocal Rank Fusion (RRF).
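Reciprocal Rank Fusion merges ranked lists using only positions, not raw scores. That is why it works for combining full-text and vector results, whose scores aren't on the same scale. Below is a minimal Python sketch of the standard formula, score(d) = Σ 1/(k + rank of d in each list), with the commonly used k = 60. It illustrates the fusion step only and is not the T-SQL from the Microsoft material.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple

def reciprocal_rank_fusion(result_lists: Sequence[Sequence[Hashable]], k: int = 60,
                           top_n: int = 10) -> List[Tuple[Hashable, float]]:
    """Fuse several ranked lists of document ids (best first) with RRF."""
    fused: Dict[Hashable, float] = defaultdict(float)
    for results in result_lists:
        for rank, doc_id in enumerate(results, start=1):
            # Each list adds 1 / (k + rank); a document absent from a list gets nothing from it.
            fused[doc_id] += 1.0 / (k + rank)
    ranked = sorted(fused.items(), key=lambda item: item[1], reverse=True)
    return ranked[:top_n]
```

A document that ranks reasonably well in both lists usually beats one that ranks first in only one list, which is the behavior you want when fusing keyword and semantic matches.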

Like this content? I recently published SQL Server 2025 Vector Search Essentials, a hands-on course covering vector search from fundamentals to production patterns.